Rhyme

Managed memory leaks.

Everything

Neatly tucked away.

The poets freed

By print.

Hackers

Tamed themselves.

Linting,

Hinting, diff-printing

Bolt cutters:

vibe-

image: Rain, Steam and Speed

Earlier this year I joined the Contraptions book club. It is my first-ever book club, so I didn’t expect to enjoy it as much as I have. Over the past 10 years I have tried and failed to properly start reading again, but could never get beyond maybe 3-4 books at a stretch. Since March I think I’ve read about 3-4 books per month.

Every generation worries that ‘kids these days’ don’t read. Online, the debate cycles endlessly: are we reading less, or just reading differently? I used to be in the ‘reading differently’ camp. After all, social media, blogs, emails, chats, etc. were all text, and I was not just reading but also producing a hell of a lot of it. I have changed my mind. I used to think I was reading plenty, scrolling through blogs, threads, and chats. But that wasn’t reading; it was more like letting highway billboards flash through my mind. As a formerly heavy reader it feels super weird to say this to myself, but books are different. Books are dwellings. Books are places.

Authors put great (sometimes monumental) effort into exploring a topic. No chat, forum, or social media post can ever come close to that. It is not just a matter of length: a post demands little effort; a book demands devotion. That difference shows.

Now that I’m reading again, it is apparent that those who read books are those who really want to read. And the act of reading, of dwelling in those ideas, is as refreshing as a hike in the mountains. What has been especially invigorating about the book club is the shared events and cast of characters that have appeared across the books. Each book becomes a room I walk through with others, and when we meet to talk, it feels like discovering a doorway between those rooms.

A couple of changes:

What is this urge that makes us want to be seen as something we aren’t? Take this blog, for example. I am in no way a writer. Barely even a proper blogger. My professional life involves very little of this kind of writing. Scientific and investor communication, sure; but not this. Why do I have, and why have I always had, this urge to be, and be seen as, creative? Is this some kind of performative, effortless polymathism?

Perhaps the desire is to be a modern Renaissance man. In one of Montaigne’s essays is the following passage:

“They would rather talk at length about other people’s trade, instead of their own, and so hope to be seen as accomplished in yet another field. Like when Archidamus faulted Periander for abandoning his reputation as a good doctor to acquire one as a bad poet.

See how Caesar goes out of his way to make us understand his ingenuity in building bridges and siege weapons. And, conversely, how much he refrains from talking about the responsibilities of his profession, his courage, and how he led his troops. His deeds prove he was an excellent officer. He wants to be known as an excellent engineer, an entirely different occupation!

Dionysius the Elder was a great military leader, as fortune would have him. But he did everything he could to be known mainly through poetry, although he knew little of it.”

Montaigne, if not a “Renaissance man”, is a man of the Renaissance. Yet he quotes even older examples of this urge. Today we have CEOs and investors who want to be seen as accomplished engineers or physicists, fields they are rather bad at. Perhaps there is a common kind of mania here. Maybe it takes hold in the minds of the movers and shakers of history. But what of those of us not of a geologic character?

I don’t think this applies to us regular folks. Hobbies and deep interests do, however, provide something critical: happiness. I don’t really care much about being seen as an expert in writing, making pretty plots, or even performing some AI-for-biology contortion. I would like to know how to do it, and how to do it well. I am led by the pleasures of intense curiosity. That is better company than Caesar, I assure you.

Maria Popova writes in one of her wonderful essays on Bertrand Russell:

‘In my darkest hours, what has saved me again and again is some action of unselfing — some instinctive wakefulness to an aspect of the world other than myself: a helping hand extended to someone else’s struggle, the dazzling galaxy just discovered millions of lightyears away, the cardinal trembling in the tree outside my window. We know this by its mirror-image — to contact happiness of any kind is “to be dissolved into something complete and great,” something beyond the bruising boundaries of the ego.’

By the end of 2023, I was in proper burnout.*1 It wasn’t until I was able to focus my mind on reading new things that recovery felt possible. Earlier this year I joined the Contraptions book club, and that complete focusing of attention has buoyed my mental state even higher: enough to write regularly and to be ever more creative at my day job.

So, I guess, the Nobel-winning philosopher and mathematician did know a thing or two when he wrote a book titled The Conquest of Happiness.

“The secret of happiness is this: let your interests be as wide as possible, and let your reactions to the things and persons that interest you be as far as possible friendly rather than hostile.”

I’ve long used Quarto and GitHub Pages to post in my studio. It works great. For many years I have also tried to publish my wiki/digital garden, but it always kind of sucked.

I had tried free programs like Logseq and Foam, and they were OK, but overloaded for my tastes. I like simple markdown files. Obsidian! Roam! I hear you, but no, I’m not going to pay either of them for control over data I generate.

Hence two intertwined issues persisted:

Impossible to solve… Or was it?

There is this company called Cloudflare. You may have heard of it. If not, you’ve certainly been affected by it. They are a critical piece of infrastructure for the internet. They also happen to have some interesting offerings.

One of them is Cloudflare Pages. The service is very much like GitHub Pages: it hosts static sites built from various git repos, including ones on GitHub. You can specify a branch to build the site from, and Cloudflare takes care of the rest. It can even use private repos… yay!

This solves problem 2. If nothing else, I can take markdown notes, have the conveniences of git, and lock it all behind the password protection Cloudflare also provides.

I almost set this up… but the Cloudflare settings page gave me an idea.

Cloudflare also lets you password-protect individual subdomains. This turned out to be just the thing I needed. Now my wiki has a public section and a one-time-password-protected private section for my daily notes.

I’ve logged my approach here: Deploying Cloudflare pages and setting passwords

Writing (almost) every day for the past month has been exhausting. It slowed my reading significantly, to say the least. But it proved to myself that I can.

I’ll be trying to write slightly longer posts, maybe once or twice a week. I don’t yet feel I’m in a proper writing mode, one where I could stick with it if I took a month to write a long, high-quality essay. I’m aiming for mediocre, but done.

Here are some links:

Back to the Future:

It seems RSS, and taking responsibility for your corner of the internet, is becoming a thing again.

https://www.wired.com/story/a-new-era-for-wired-that-starts-with-you/

https://www.theverge.com/bulletin/710925/the-verge-is-getting-way-more-personal-with-following-feeds

https://www.citationneeded.news/curate-with-rss/

https://daringfireball.net/linked/2025/08/03/how-to-leave-substack

https://www.wired.com/story/rss-readers-feedly-inoreader-old-reader/

https://gilest.org/notes/rss-feels.html

Podcast: Staying Decent in an Indecent Society with Ian Buruma:

https://hac.podbean.com/e/staying-decent-in-an-indecent-society-with-ian-buruma-bonus-episode/

Be Cheerful and Live Your Life:

https://www.openculture.com/2025/07/archaeologists-discover-a-2400-year-old-skeleton-mosaic.html

The Always Wonderful Bluey Teaches Resilience:

https://theconversation.com/researchers-watched-150-episodes-of-bluey-they-found-it-can-teach-kids-about-resilience-for-real-life-262202

Look, we really, really can’t go back in time:

https://www.quantamagazine.org/epic-effort-to-ground-physics-in-math-opens-up-the-secrets-of-time-20250611/

It was a small announcement on an innocuous page about “spring cleaning”. The herald, some guy with the kind of name that promised he was all yours. Four sentences you only find because you were already looking for a shortcut through life. A paragraph, tidy as a folded handkerchief, explained that a certain popular reader of feeds was retiring in four months’ time. Somewhere in the draughty back alleys of the web, a small god cleared his throat. Once he had roared every morning in a thousand offices. Now, when people clicked for their daily liturgy, the sound he made was… domesticated.

He is called ArrEsEs by those who enjoy syllables. He wears a round orange halo with three neat ripples in it. Strictly speaking, this is an icon1, but gods are not strict about these things. He presides over the River of Posts, which is less picturesque than it sounds and runs through everyone’s house at once. His priests are librarians and tinkerers and persons who believe in putting things in order so they can be pleasantly disordered later. The temple benches are arranged in feeds. The chief sacrament is “Mark All As Read,” which is the kind of absolution that leaves you lighter and vaguely suspicious you’ve got away with something.

There was a time the great city-temples kept a candle lit for him right on their threshold. The Fox of Fire invited him in and called it Live Bookmarks.2 The moldable church, once a suit, then a car, then a journey, in typical style stamped “RSS” beside the address like a house number. The Explorer adopted the little orange beacon with the enthusiasm of someone who has been told there will be cake. The Singers built him a pew and handed out hymnals. You could walk into almost any shrine and find his votive lamp glowing: “The river comes this way.” Later, accountants, the men behind the man who was yours, discovered that candles are unmonetizable and, one by one, the lamps were tidied into drawers that say “More…”.

ArrEsEs has lineage. Long before he knocked on doors with a bundle of headlines, there was Old Mother Press, the iron-fingered goddess of moveable type, patron of ink that bites and paper that complains. Her creed was simple: get the word out. She marched letters into columns and columns into broadsides until villages woke up arguing the same argument.3* ArrEsEs is her great-grandchild—quick-footed, soft-spoken—who learned to carry the broadsheet to each door at once and wait politely on the mat. He still bears her family look: text in tidy rows, dates that mind their place, headlines that know how to stand up straight.**

Four months after the Announcement, the big temple shut its doors with a soft click. The congregation wandered off in small, stubborn knots and started chapels in back rooms with unhelpful names like OGRP4. ArrEsEs took to traveling again, coat collar up, suitcase full of headlines, knocking on back doors at respectable intervals. “No hurry,” he would say, leaving the bundle on the step. “When you’re ready.” The larger gods of the Square ring bells until you come out in your slippers; this one waits with the patience of bread.

Like all small gods, he thrives on little rites. He smiles when you put his name plainly on your door: a link that says feed without a blush. He approves of ~~bogrolls~~ blogrolls, because they are how villages point at one another and remember they are villages. He warms to OPML, which is a pilgrim’s list people swap like seed packets. He’s indulgent about the details: /rss.xml, /atom.xml, /feed, he will answer to all of them. But he purrs (quietly; dignified creature) for a cleanly formed offering and a sensible update cadence5.

His miracles are modest and cannot be tallied on a quarterly slide. He brings things in the order they happened. He does silence properly. The river arrives in the morning with twenty-seven items; you read two, save three, and let the rest drift by with the calm certainty that rivers do not take offense. He remembers what you finished. He promises tomorrow will come with its own bundle, and if you happen to be away, he will keep the stack neat and not wedge a “You Might Also Like” leaflet between your socks.

These days, though, ArrEsEs is lean at the ribs. The big estates threw dams across his tributaries and called them platforms. Good water disappeared behind walls; the rest was coaxed into ornamental channels that loop the palace and reflect only the palace. Where streams once argued cheerfully, they now mutter through sluices and churn a Gloomwheel that turns and turns without making flour—an endless thumb-crank that insists there is more, and worse, if you’ll just keep scrolling. He can drink from it, but it leaves a taste of tin and yesterday’s news.

A god’s displeasure tells you more than his blessings. His is mild. If you hide the feed, he grows thin around the edges. If you build a house that is only a façade until seven JSters haul in the furniture, he coughs and brings you only the headline and a smell of varnish6. If you replace paragraphs with an endless corridor, he develops the kind of seasickness that keeps old sailors ashore. He does not smite. He sulks, which is worse, because you may not notice until you wonder where everyone went.

Still, belief has a way of pooling in low places. In the quiet hours, the little chapels hum: home pages with kettles on, personal sites that remember how to wave, gardeners who publish their lists of other gardeners. Somewhere, a reader you’ve never met presses a small, homely button that says subscribe. The god straightens, just a touch. He is gentler than his grandmother who rattled windows with every edition, but the family gift endures. If you invite him, tomorrow he will be there, on your step, with a bundle of fresh pages and a polite cough. You can let him in, or make tea first. He’ll wait. He always has.

Heavily edited sloptraption.

As part of the Contraptions book club we will be reading the Essays of Montaigne. I actually started to read the Donald Frame translation, but felt I needed more context. In the book club, Paul Millerd had recommended Sarah Bakewell’s book on the life of Montaigne. I just finished it, and it left me feeling rather warm.

By contrast, a recent podcast about Byung-Chul Han’s latest book left me rather cold and unsure. The book, The Crisis of Narration, argues that we have lost the ability to tell good stories. Stories, Han says, create a shared reality; instead, they have been turned into a commodity for creating consumers. Storytelling has become storyselling. As far as I know, Han doesn’t offer any solutions. Social media has taken a dark turn, but it would have been nice to know what we can do about it, if anything. Montaigne seems to offer some relief.

Montaigne, literally the first person to write essays (and, by the way, a cat person), writes in a way that one could mistake for storyselling. But look deeper and it turns out not to be the case. He writes in a frank and meandering way that reminds me of the old internet. Dead for more than 400 years, Montaigne seems more real as a person than influencers ever could.

I happened to come across these two sources by temporal coincidence, so, to quote Montaigne, what do I know? But writing and thinking like Montaigne could be the antidote to Han’s doom. Maybe we don’t need a global story thread; knowing how you thwarted the bugs in your balcony garden could create the sense of liveness that social media has stolen from us.

I’ll be reading Don Quixote and Montaigne’s essays over the next two months, and I’m certain my views will change. Right now, I think an average, even mediocre, lens on life will take us through these dark days.

I leave you with two wonderful quotes (obviously about cats) from Michel de Montaigne:

“When I play with my cat, who knows whether she is not amusing herself with me more than I with her.”

“In nine lifetimes, you’ll never know as much about your cat as your cat knows about you.”

Start tiny and scale fast without vendor lock-in

All biotech labs have data, tons of it, and the problem is the same at every scale: accessing data across experiments is hard. Often data simply gets lost on somebody’s laptop, with a pretty plot on a poster as the only clue it ever existed. The problem becomes almost insurmountable if you try to track multiple data types. Any kind of data management used to carry a large overhead. New technology like DuckDB and its new data lakehouse infrastructure, DuckLake, aims to make it very easy to adopt and to scale with your data, all while avoiding vendor lock-in.

High-content microscopy, single-cell sequencing, ELISAs, flow-cytometry FCS files, Lab Notebook PDFs: today’s wet-lab output is a torrent of heterogeneous, PB-scale assets. Traditional “raw-files-in-folders + SQL warehouse for analytics” architectures break down when you need to query an image-derived feature next to a CRISPR guide list under GMP audit. A lakehouse merges the cheap, schema-agnostic storage of a data lake with the ACID guarantees, time-travel, and governance of a warehouse, on one platform. Research teams, at discovery or clinical trial stages, can enjoy faster insights, lower duplication, and smoother compliance when they adopt a lakehouse model.

DuckLake is still pretty new and isn’t quite production ready, but it comes from the team behind DuckDB, and I expect they will deliver high quality as 2025 progresses. Data lakes, and even lakehouses, are not new at all. Iceberg and Delta pioneered open table formats, but they still scatter JSON/Avro manifests across object storage and bolt on a separate catalog database. DuckLake flips the design: all metadata lives in a normal SQL database, while data stays in Parquet on blob storage. The result is simpler, faster, cross-table ACID transactions, and you can back the catalog with Postgres, MySQL, MotherDuck, or even DuckDB itself.

Psst… if you don’t understand or don’t care what ACID, manifests, or object stores mean, assign a grad student; it’s not complicated.
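For the curious (or the grad student), here is a toy sketch of the “metadata in a SQL database, data in files” idea. It is not DuckLake and does not use its schema or API: it assumes Python’s standard-library `sqlite3` as the catalog and JSON files as stand-ins for Parquet, and the class `ToyLake`, its tables, and the ELISA/guide rows are all hypothetical. What it tries to show is why the design is appealing: data files are written first and never modified, a single catalog transaction makes a multi-table write visible all at once (cross-table atomicity), and keeping old snapshot ids around gives time travel almost for free.

```python
from __future__ import annotations

import json
import sqlite3
import tempfile
from pathlib import Path


class ToyLake:
    """Hypothetical toy lakehouse: catalog rows in SQLite, data in immutable files.

    Illustrates the catalog-in-a-SQL-database idea only. It is NOT DuckLake's
    schema or API; JSON files stand in for Parquet, and a single writer is assumed.
    """

    def __init__(self, root: Path) -> None:
        self.data_dir = root / "data"
        self.data_dir.mkdir(parents=True, exist_ok=True)
        self.catalog = sqlite3.connect(root / "catalog.sqlite")
        with self.catalog:
            self.catalog.execute(
                "CREATE TABLE IF NOT EXISTS snapshot (id INTEGER PRIMARY KEY)")
            self.catalog.execute(
                "CREATE TABLE IF NOT EXISTS data_file "
                "(tbl TEXT, path TEXT, snapshot_id INTEGER)")

    def latest(self) -> int:
        """Most recent committed snapshot id (0 if nothing was committed yet)."""
        row = self.catalog.execute(
            "SELECT COALESCE(MAX(id), 0) FROM snapshot").fetchone()
        return row[0]

    def append(self, batches: dict[str, list[dict]]) -> int:
        """Append rows to one or more tables as a single atomic snapshot."""
        snap = self.latest() + 1
        # 1. Write immutable data files first; readers cannot see them
        #    until the catalog points at them.
        entries = []
        for tbl, rows in batches.items():
            path = self.data_dir / f"{tbl}-{snap}.json"
            path.write_text(json.dumps(rows))
            entries.append((tbl, str(path), snap))
        # 2. Commit point: one catalog transaction registers every table's file,
        #    so all tables change together or (on error) not at all.
        with self.catalog:
            self.catalog.execute("INSERT INTO snapshot (id) VALUES (?)", (snap,))
            self.catalog.executemany(
                "INSERT INTO data_file VALUES (?, ?, ?)", entries)
        return snap

    def read(self, tbl: str, as_of: int | None = None) -> list[dict]:
        """Time travel: only files registered at or before `as_of` are visible."""
        as_of = self.latest() if as_of is None else as_of
        files = self.catalog.execute(
            "SELECT path FROM data_file WHERE tbl = ? AND snapshot_id <= ? "
            "ORDER BY snapshot_id", (tbl, as_of)).fetchall()
        rows: list[dict] = []
        for (path,) in files:
            rows.extend(json.loads(Path(path).read_text()))
        return rows


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lake = ToyLake(Path(tmp))
        v1 = lake.append({"elisa": [{"well": "A1", "od450": 0.82}]})
        lake.append({"elisa": [{"well": "A2", "od450": 1.10}],
                     "guides": [{"gene": "TP53", "guide": "g1"}]})
        print(len(lake.read("elisa")))            # 2 rows at the latest snapshot
        print(len(lake.read("elisa", as_of=v1)))  # 1 row as of snapshot 1
        print(lake.read("guides", as_of=v1))      # [] since guides did not exist yet
        lake.catalog.close()
```

The real DuckLake is driven through DuckDB SQL, by attaching a `ducklake:` catalog backed by one of the databases listed above; check its documentation for the exact syntax rather than treating this toy as a guide. The toy also skips deletes, schema changes, and concurrent writers, which is exactly the hard part the DuckLake team is handling.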

In his Substack post today, Venkatesh Rao wrote about reading and writing in the age of LLMs as playing with toys and making toys, respectively. In one part he writes about how the dopamine feedback loop from writing drove his switch from engineering to writing. For him, writing has ludic, play-like qualities.

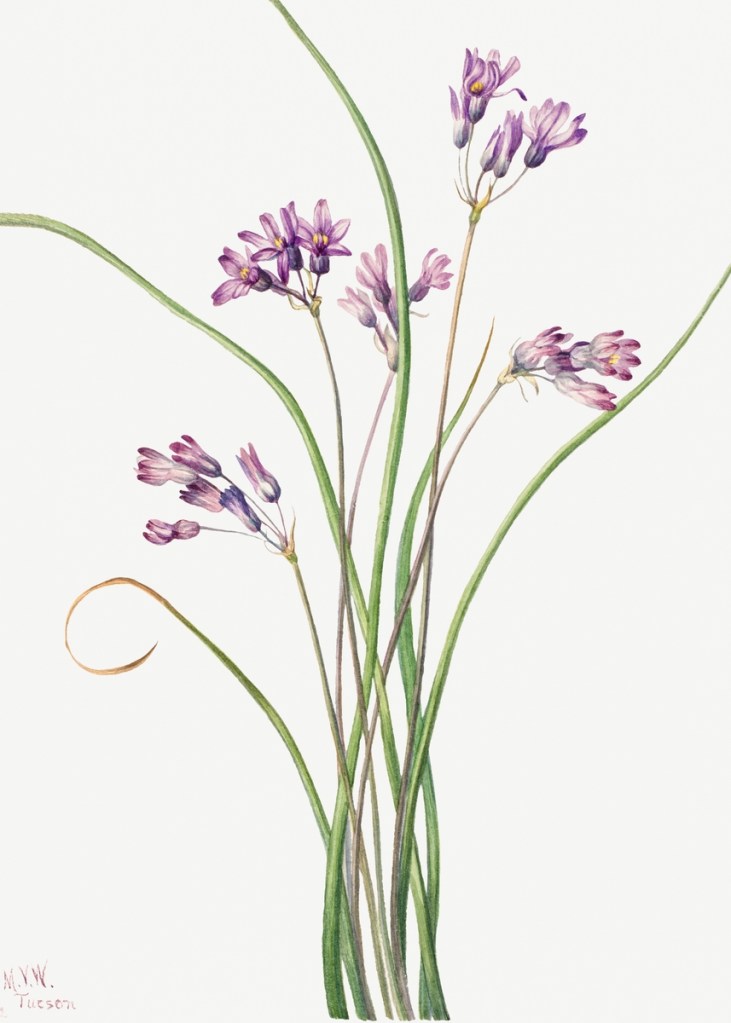

I have made almost all my “career” decisions as a function of play. I originally started off with a deep love of plants: how to grow them and their impact on the world. I was convinced I was going to have a lot of fun. I did have some. My wonderful undergrad professor literally hand-held me through my first experiments growing tobacco plants from seed. But that was about it. My next experiment was with woody plants: growing them from seed alone took 6 months, and at the end I had 4 measly leaves to experiment with. I quickly switched to cell biology.

This went a bit better, and I stayed with the medium through my PhD. Although I was having enough aha moments, I knew in the first year that it was still a bit slow. What rescued me was my refusal to do analysis by hand. I loved biology, but I refused to sit and grind through analysis manually. Luckily, I had picked up enough programming skills.

I could reasonably automate the analysis workflow. It was difficult at first, but the error messages came at the rate I needed them to. I found new errors viscerally rewarding; this was now game territory. The analysis still held meaning: it wasn’t for some random A/B test or some LeetCode thing. No, this mattered.

Machine learning, deep learning, LLMs, and their applications in bio continue to enchant me. I can explore even more with the same effort and time. I interact with biology at the rate of dopamine feedback I need. I have found my ludic frequency.

Years ago, maybe even a decade, I fell in love with a piece of software called Scrivener. I could never justify buying it because I didn’t actually write. But owning it would represent a little bit of the identity I would like to have: a writer. The Fourth of July long weekend gave me a running start. The plan was to write every day for a month. If I did, I would buy Scrivener. It was going quite well; then I couldn’t write for two days.

I had fallen off the wagon. But hey, I have a wagon. Writing for twenty days isn’t nothing. Like David Allen says, getting back on the wagon is what it’s all about. Falling off happens because life happens. And life happens to everybody. So, hey, I’m back.

I almost wasn’t. I almost said oh well. Then I watched the Summer of Protocols (SoP) town hall talk by Robert Peake: The Infinite Game of Poetry – Protocols for Living, Listening, and Transcending the Rules. The infinite game of poetry is the infinite game of writing. The important bit is to keep playing*.

Since this is part of SoP, the question is, of course: what is the protocol? Robert goes much deeper than just the protocol of writing poetry and being a poet. He gives two equations for doing your life’s work and building the self. I won’t reproduce those equations here; you should watch the talk.

Here’s the gist of the poeting/writing protocol though:

Tyler Cowen, who is, if nothing else, a prolific writer, wrote a similar, though less compact, set in 2019.

Zooming out, this applies to all work, not just writing. Showing up and getting back on the wagon is where it all coalesces. But where am I going? To me, building wagons is as important as heading somewhere with potential for something new, even if the path is uncertain. Pointing in the direction of maximal interestingness.

This need for exploration, and the support that comes from constancy, is captured well in the song Life in a Wind:

“One foot in front of the other, all you gotta do, brother

[…]

Live life in the wind, take flight on a whim”

* The Scrivener team seems to understand this well. Their trial isn’t a consecutive thirty days, but thirty days of use 🙂